High-performance Analog-to-Digital Converters (ADCs) are tedious to design, as these building blocks are sensitive to many circuit-level impairments. Thanks to years of research in this domain, the power efficiency of these converters has improved by orders of magnitude. However, further improving their performance has become increasingly difficult as fundamental limits are approached.

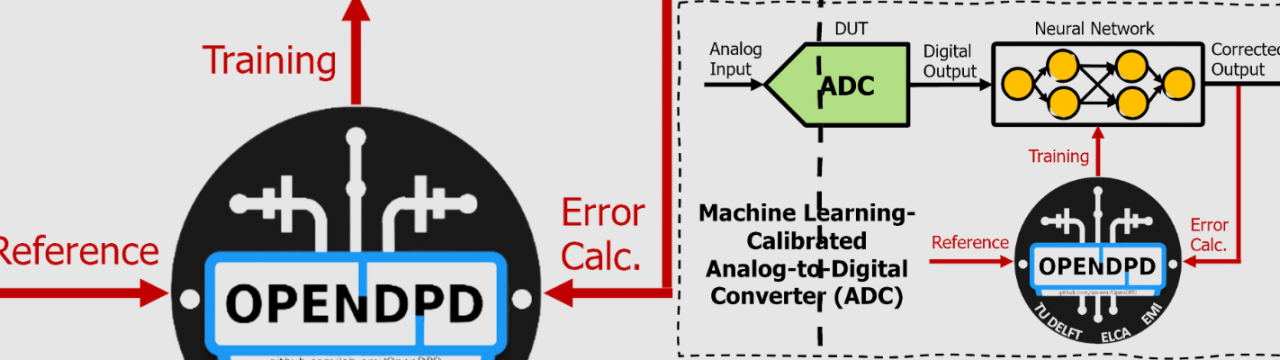

This project aims to develop machine learning algorithms to calibrate ADCs whose circuits are optimized for noise only, with all other impairments corrected by the machine learning algorithms. It will also investigate the implementation cost of these algorithms and how to balance performance against implementation cost.
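The sketch below illustrates what it can look like for a learning algorithm to calibrate impairments. It assumes a foreground setup in which the input is known during training. It models a 10-bit successive-approximation (SAR) ADC with random capacitor mismatch and learns the digital bit weights with the least-mean-squares (LMS) algorithm, which is linear regression trained by stochastic gradient descent. The resolution, sub-binary radix, mismatch level, step size and all function names are hypothetical choices for illustration. They are not taken from the project description, and LMS is only one simple candidate among the algorithms such a project could study.

```python
import math
import random

N_BITS = 10      # hypothetical resolution
RADIX = 1.8      # hypothetical sub-binary radix, gives redundancy between bits
MISMATCH = 0.02  # hypothetical 1-sigma relative capacitor mismatch


def nominal_weights(n_bits=N_BITS, radix=RADIX):
    """Design-time bit weights, normalized so they sum to 1."""
    raw = [radix ** (n_bits - 1 - i) for i in range(n_bits)]
    total = sum(raw)
    return [r / total for r in raw]


def sar_convert(x, actual_weights):
    """Successive-approximation search using the mismatched physical DAC weights."""
    bits = []
    residue = x
    for w in actual_weights:
        if residue >= w:
            bits.append(1)
            residue -= w
        else:
            bits.append(0)
    return bits


def reconstruct(bits, weights, offset=0.0):
    """Digital output: weighted sum of the raw comparator decisions."""
    return offset + sum(b * w for b, w in zip(bits, weights))


def lms_calibrate(samples, init_weights, mu=0.01):
    """Foreground LMS: adapt digital weights and offset so the output tracks the known input."""
    w = list(init_weights)
    offset = 0.0
    for x, bits in samples:
        err = x - reconstruct(bits, w, offset)
        for i, b in enumerate(bits):
            w[i] += mu * err * b
        offset += mu * err
    return w, offset


def rms_error(samples, weights, offset=0.0):
    """Root-mean-square difference between known inputs and reconstructed outputs."""
    sq = [(x - reconstruct(bits, weights, offset)) ** 2 for x, bits in samples]
    return math.sqrt(sum(sq) / len(sq))


def main(seed=1):
    rng = random.Random(seed)
    nominal = nominal_weights()
    # The physical weights deviate randomly from the design values.
    actual = [w * (1.0 + rng.gauss(0.0, MISMATCH)) for w in nominal]
    full_scale = sum(actual)

    def make_set(n):
        xs = [rng.uniform(0.0, full_scale) for _ in range(n)]
        return [(x, sar_convert(x, actual)) for x in xs]

    train, test = make_set(20000), make_set(2000)
    w_cal, off_cal = lms_calibrate(train, nominal)
    before = rms_error(test, nominal)          # uncalibrated: design weights
    after = rms_error(test, w_cal, off_cal)    # calibrated: learned weights
    return before, after


if __name__ == "__main__":
    before, after = main()
    print(f"RMS error before calibration: {before:.5f}")
    print(f"RMS error after calibration:  {after:.5f}")
```

The sub-binary radix is a common design choice in digitally calibrated SAR ADCs. It adds redundancy, so mismatch errors can be corrected by reweighting the bits. With a strictly binary radix, some errors would appear as missing decision levels that no set of digital weights can undo. The learned correction costs roughly one multiply-accumulate per bit per sample. This hints at the kind of performance-versus-implementation-cost trade-off the project sets out to investigate.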